TURBO: Total-body PET analysis suite

Analysis of long axial field-of-view (total-body) PET data is complicated by the sheer number of target tissues and by variable uptake kinetics across tissues. Moreover, some tissues (such as skeletal muscle) have uniform uptake kinetics, whereas other organs and regions (such as the brain or kidneys) show significant spatial variation in uptake due to the biological composition of the tissue. Accordingly, a single voxelwise modelling approach cannot be applied across all tissues and organs. The TURBO toolbox provides a unified approach for reproducible, automated processing and modelling of total-body PET data with multiple ligands and kinetic models. TURBO implements automatic PET/CT coregistration and motion correction, CT-based tissue segmentation, image-based input determination, anatomically constrained voxelwise kinetic modelling for over 100 tissues, data analysis, and quality control. All basic kinetic models (Patlak, FUR, SUV, SRTM, etc.) are supported. The TURBO codebase is site-independent, and the system can be installed on any compatible Linux server. TURBO is developed and maintained by Jouni Tuisku and Santeri Palonen.
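As an illustration of one of the supported models, the Patlak plot for an irreversible tracer can be sketched as below. This is a minimal, hedged example and not TURBO's own code: the function names, the `t_star` cut-off, and the synthetic curves are all assumptions made for illustration. It relies on the standard Patlak relation, in which the tissue-to-plasma ratio becomes linear in "stretched time" (the running integral of the plasma curve divided by the plasma concentration), with the slope giving the net influx rate Ki.

```python
def cumulative_trapezoid(times, values):
    """Running trapezoidal integral of values over times, starting at 0."""
    integral = [0.0]
    for i in range(1, len(times)):
        dt = times[i] - times[i - 1]
        integral.append(integral[-1] + 0.5 * dt * (values[i] + values[i - 1]))
    return integral


def patlak_fit(times, plasma, tissue, t_star):
    """Return (Ki, intercept) from a least-squares line through the Patlak plot.

    Only frames with time >= t_star (a hypothetical cut-off after which the
    plot is assumed to be linear) enter the fit.
    """
    integral = cumulative_trapezoid(times, plasma)
    xs, ys = [], []
    for t, cp, ct, icp in zip(times, plasma, tissue, integral):
        if t >= t_star and cp > 0:
            xs.append(icp / cp)  # "stretched time"
            ys.append(ct / cp)   # tissue-to-plasma ratio
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    ki = sxy / sxx
    return ki, mean_y - ki * mean_x


# Synthetic, noise-free example built with Ki = 0.05 and intercept 0.3.
times = [0.0, 1.0, 2.0, 5.0, 10.0, 20.0, 30.0, 45.0, 60.0]   # minutes
plasma = [0.0, 80.0, 40.0, 20.0, 12.0, 8.0, 6.0, 5.0, 4.0]   # kBq/mL
integral = cumulative_trapezoid(times, plasma)
tissue = [0.05 * icp + 0.3 * cp for icp, cp in zip(integral, plasma)]
ki, v0 = patlak_fit(times, plasma, tissue, t_star=10.0)
```

Because the synthetic tissue curve is generated from the same relation, the fit recovers the slope and intercept used to build it. Real data would additionally need frame-duration weighting and a justified choice of the linear phase.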

If you use TURBO in your work, please cite the following paper: Tuisku, J., Palonen, S., Kärpijoki, H., Latva-Rasku, A., Tuomola, N., Harju, H., Nesterov, S., Oikonen, V., Iida, H., Teuho, J., Han, C., Karjalainen, T., Kirjavainen, A.K., Rajander, J., Klen, R., Nuutila, P., Knuuti, J., & Nummenmaa, L. (preprint). Automated Total-body PET Image Processing and Kinetic Modeling with the TURBO Toolbox. bioRxiv.

GIT: https://gitlab.utu.fi/human-emotion-systems-laboratory/turbo

Manual: https://turbo.utu.fi

MAGIA: Brain-PET data processing toolbox

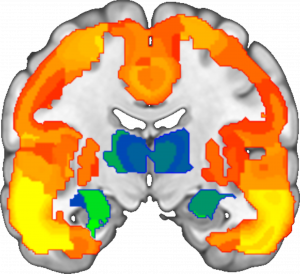

The MAGIA toolbox is designed for swift, automated preprocessing and kinetic modelling of PET data with multiple ligands and models. MAGIA implements automatic image retrieval from PACS, combines functions from the FreeSurfer and SPM image preprocessing pipelines with in-house PET modelling and metadata annotation code, and computes parametric volumes as well as regional estimates based on FreeSurfer parcellations. Quality control is performed with in-house code and the MRIQC toolbox. The MAGIA codebase is site-independent and can be installed anywhere. MAGIA was developed by Tomi Karjalainen and is currently maintained by Jouni Tuisku.

If you use MAGIA in your work, please cite the following paper: Karjalainen, T., Tuisku, J., Santavirta, S., Kantonen, T., Bucci, M., Tuominen, L., Hirvonen, J., Hietala, J., Rinne, J., & Nummenmaa, L. (2020). Magia: Robust automated image processing and kinetic modeling toolbox for PET neuroinformatics. Frontiers in Neuroinformatics.

GIT: https://gitlab.utu.fi/human-emotion-systems-laboratory/magia

Onni: An online experiment platform

Onni (“Bliss” in Finnish) is Emotion Lab’s toolbox for online experiments. You can think of it as a merger of a super simple E-Prime or Presentation and a hassle-free Webropol / Google Docs that can also handle bodily sensation mapping (emBODY measurements). It is geared towards getting fast ratings for video / audio / image / text stimuli and setting up simple questionnaires, but it is easy to customise for other uses too. Setting up experiments is fast: a web-based user interface with simple formatting options, batch stimulus upload, and questionnaire generation allows deploying studies in minutes. Data are stored centrally and are accessible in R- and Excel-friendly formats. You can try experiments done with the release candidate at onni.utu.fi. Onni is developed by Timo Heikkilä and Ossi Laine.

If you use Onni in your research, please cite it as follows: Heikkilä, T.T., Laine, O., Savela, J., & Nummenmaa, L. (2020). Onni: An online experiment platform for research (Version v1.0). Zenodo. http://doi.org/10.5281/zenodo.4305573

GIT: https://gitlab.utu.fi/tithei/pet-rating

emBODY: Mapping bodily basis of emotions

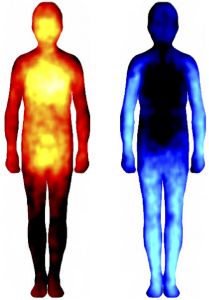

Emotions and other sensations are often experienced in the body. The emBODY tool can be used for mapping self-reported, topographical emotional sensations in distinct bodily regions. The topographical mapping concept can also be extended to numerous other applications, such as indicating the location of pain or hedonic hotspots in the body, or simply annotating features of interest in photographs. emBODY was developed by Enrico Glerean and further polished by Juulia Suvilehto.

If you use emBODY in your work, please cite the following paper: Nummenmaa, L., Glerean, E., Hari, R., & Hietanen, J.K. (2014). Bodily maps of emotions. Proceedings of the National Academy of Sciences of the United States of America, 111, 646-651.

GIT: https://version.aalto.fi/gitlab/eglerean/embody

emBODY implementation in Gorilla

It is also possible to use the body mapping tools with the popular Gorilla online experiment laboratory using Gorilla’s Canvas Painting zone. This feature is still a beta release, but to our knowledge it is perfectly stable. The main differences from our own tools are that data are stored as binary rather than integer values, and that the data format (bitmap images) is somewhat cumbersome. The Canvas Painting zone, however, works well, and you can see our data from art-evoked emotions acquired with Gorilla in this paper. To use body mapping in Gorilla, generate a Canvas Painting zone for your experimental display and use the desired body shape as the background image. You may download our original versions from the emotion, social touch, and sexual touch studies from this link. Vesa Putkinen from our laboratory (with a bit of help from Severi Santavirta and Birgitta Paranko) has written code for analysing the data acquired with Gorilla tools that you can download from GIT. It is based on the original code written by Enrico Glerean, but accommodates the data format in Gorilla, taking care of data scaling and cleanup.

GIT: https://github.com/vputkinen/music_embody_demo

Body templates from our previous studies may be downloaded here

Mapping emotional embeddings from written texts

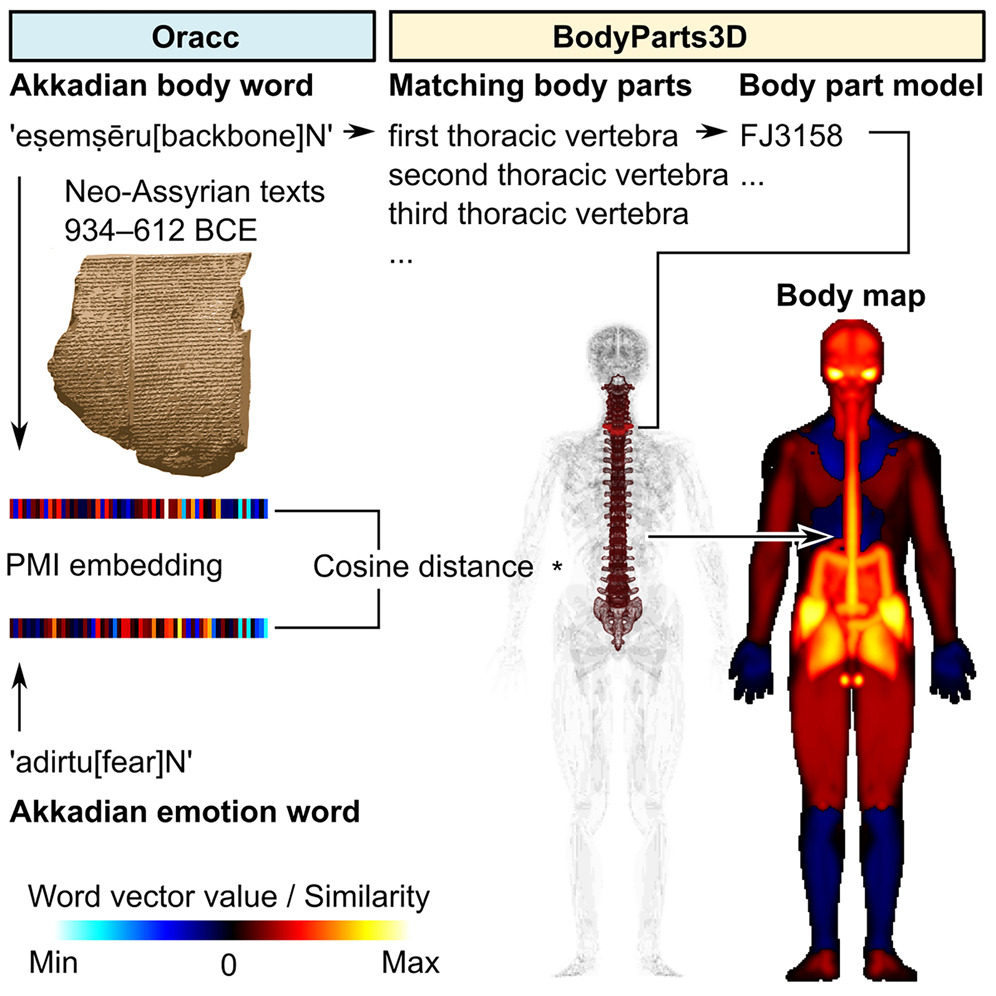

Because emotions are associated with subjective, emotion-specific bodily sensations, this relationship can be used for mapping emotional representations from written texts. This computational linguistic tool maps the occurrence of words pertaining to emotions and body parts in the corpus, and uses point-wise mutual information (PMI) embeddings to derive their co-occurrence patterns. These patterns are subsequently weighted by embeddings of emotion and body words and mapped on a 3D human model, providing prototypical bodily maps of emotions in the text corpus. The code was written by Juha Lahnakoski working in Jülich.
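To make the PMI idea concrete, the following is a simplified sketch that scores how strongly an emotion word and a body word co-occur within the same text unit. It is not the published pipeline: the function name `pmi_table`, the document-level counting, the whitespace tokenisation, and the toy corpus are assumptions made for illustration, and the embedding-based weighting and 3D mapping steps are left out.

```python
import math
from collections import Counter
from itertools import product


def pmi_table(documents, emotion_words, body_words):
    """Document-level PMI (in bits) for every co-occurring emotion/body word pair.

    emotion_words and body_words are sets of lower-case words.
    """
    n_docs = len(documents)
    word_counts = Counter()
    pair_counts = Counter()
    for doc in documents:
        tokens = set(doc.lower().split())
        emotions = tokens & emotion_words
        bodies = tokens & body_words
        word_counts.update(emotions | bodies)
        pair_counts.update(product(emotions, bodies))
    table = {}
    for (emotion, body), n_pair in pair_counts.items():
        p_pair = n_pair / n_docs
        p_emotion = word_counts[emotion] / n_docs
        p_body = word_counts[body] / n_docs
        table[(emotion, body)] = math.log2(p_pair / (p_emotion * p_body))
    return table


# Toy corpus: each string is treated as one co-occurrence window.
corpus = [
    "fear gripped my heart",
    "my heart raced with fear",
    "anger burned in my head",
    "my stomach hurt",
]
table = pmi_table(corpus, {"fear", "anger"}, {"heart", "head", "stomach"})
```

Positive values mean the pair co-occurs more often than expected if the two words were independent. Pairs that never co-occur are simply absent from the table rather than being assigned minus infinity.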

Code: https://zenodo.org/records/11242729

If you use the semantic embedding mapper in your work, please cite the following paper: Lahnakoski, J.M., Bennett, E., Nummenmaa, L., Steinert, U., Sams, M., & Svärd, S. (2024). Embodied emotions in ancient Neo-Assyrian texts revealed by bodily mapping of emotional semantics. iScience.

e-ISC toolbox

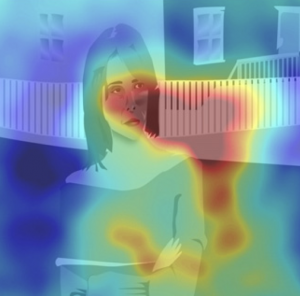

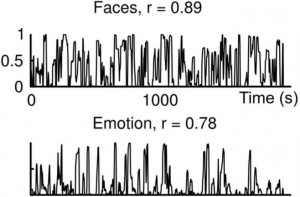

Eye movements provide an unobtrusive measure for quantifying cognition, attention and emotion. Model-based analysis of eye movements recorded during a complex, high-dimensional stimulus such as a movie is, however, complicated. To resolve this issue, we have developed the Eye Movement Intersubject Correlation Toolbox (e-ISC), which provides a straightforward way for model-free analysis of eye movements. The toolbox computes the time course of the similarity of eye movements across individuals, based on sliding-window heatmaps of fixation positions. The time course can be further averaged to index the total similarity of eye movements. The e-ISC toolbox was developed by Juha Lahnakoski.
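The sliding-window idea can be sketched roughly as follows, assuming NumPy is available. This is an illustration under stated assumptions rather than the toolbox's implementation: the function names, the coarse 8 × 8 binning (used here in place of whatever smoothing the toolbox applies), the normalised screen coordinates, and the synthetic gaze data are all hypothetical.

```python
import numpy as np


def fixation_heatmap(x, y, bins, extent):
    """Flattened 2D histogram of gaze positions (a coarse, unsmoothed heatmap)."""
    hist, _, _ = np.histogram2d(x, y, bins=bins, range=extent)
    return hist.ravel()


def eye_isc(subjects, window, step, bins=(8, 8), extent=((0, 1), (0, 1))):
    """Mean pairwise heatmap correlation across subjects for each sliding window.

    subjects: list of arrays with columns (time, x, y), one array per subject.
    Returns the window start times and one similarity value per window.
    """
    t_end = max(s[:, 0].max() for s in subjects)
    starts = np.arange(0.0, t_end - window + 1e-9, step)
    pairs = np.triu_indices(len(subjects), k=1)  # each subject pair once
    isc = []
    for start in starts:
        maps = []
        for s in subjects:
            in_window = (s[:, 0] >= start) & (s[:, 0] < start + window)
            maps.append(fixation_heatmap(s[in_window, 1], s[in_window, 2], bins, extent))
        corr = np.corrcoef(np.vstack(maps))
        isc.append(corr[pairs].mean())
    return starts, np.array(isc)


# Synthetic data: three viewers follow a shared gaze path with individual noise.
rng = np.random.default_rng(0)
t = np.arange(0.0, 10.0, 0.1)
shared = rng.random((t.size, 2))
subjects = [
    np.column_stack([t, np.clip(shared + 0.02 * rng.standard_normal(shared.shape), 0, 1)])
    for _ in range(3)
]
starts, isc = eye_isc(subjects, window=2.0, step=1.0)
mean_isc = isc.mean()  # overall similarity, averaged over windows
```

A window in which a subject has no valid samples would give a constant heatmap and an undefined correlation, so real recordings would need explicit handling of blinks and missing data.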

If you use the e-ISC toolbox, please cite the following paper: Nummenmaa, L., Smirnov, D., Lahnakoski, J., Glerean, E., Jääskeläinen, I.P., Sams, M., & Hari, R. (2014). Mental action simulation synchronizes action-observation circuits across individuals. The Journal of Neuroscience, 34, 748–757.

GIT: https://version.aalto.fi/gitlab/BML/eyeisc

Fast Ridge Regression Toolbox

Multicollinearity poses a major problem when analysing fMRI data with high-dimensional stimulus models. Traditional orthogonalisation techniques are often problematic: they yield suboptimal solutions, and the orthogonalised regressors and their relations are difficult to interpret. Our proposed solution is to use ridge regression, which penalises the regressors based on their multicollinearity structure, yielding stable and accurate models for the predictors. This code was written by Jonatan Ropponen.
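For readers unfamiliar with the technique, the closed-form ridge estimate can be sketched in a few lines, assuming NumPy is available. This is a generic textbook illustration, not the toolbox's code: the function name `ridge_fit`, the penalty value, and the simulated regressors are assumptions. The estimate solves (XᵀX + αI)β = Xᵀy, so α = 0 reduces to ordinary least squares and larger α shrinks the coefficients.

```python
import numpy as np


def ridge_fit(X, y, alpha):
    """Closed-form ridge estimate: solve (X'X + alpha * I) beta = X'y."""
    n_features = X.shape[1]
    gram = X.T @ X + alpha * np.eye(n_features)
    return np.linalg.solve(gram, X.T @ y)


# Two nearly collinear regressors, both with a true weight of 1.
rng = np.random.default_rng(0)
n = 200
x1 = rng.standard_normal(n)
x2 = x1 + 0.01 * rng.standard_normal(n)
X = np.column_stack([x1, x2])
y = x1 + x2 + 0.1 * rng.standard_normal(n)

beta_ols = ridge_fit(X, y, alpha=0.0)    # unpenalised: unstable under collinearity
beta_ridge = ridge_fit(X, y, alpha=1.0)  # penalised: shrunk, more stable weights
```

In practice the penalty strength would be chosen by cross-validation rather than fixed by hand, and the regressors would typically be standardised first.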

GIT: https://github.com/jjhrop/ridge_tpc

Cluster-based Bayesian Hierarchical Modelling

Bayesian hierarchical modelling (BHM) can be used for robust analysis of neuroimaging data at the region-of-interest level, yet the technique is computationally prohibitive at the full-volume (voxelwise) level. This toolbox allows generation of anatomically constrained, molecularly (or functionally) homogeneous regions of interest. These can be used in BHM, and the results can subsequently be back-projected to the original anatomical space to yield “pseudo-full-volume” maps of the results. The Git repository also contains nifty scripts for general-purpose BHM analysis. The package was written by Tomi Karjalainen.

GIT: https://github.com/tkkarjal/mor-variability

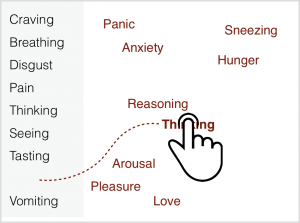

Sorting tool for fast similarity ratings

Many psychophysical, neuroimaging, and analytic (e.g. RSA) techniques require the similarity structure of the stimuli, traits or phenomena under scrutiny. Direct pairwise ratings are the usual gold standard, but they become prohibitive once the number of tokens rises even slightly above 10, because the number of pairs grows quadratically. This can be circumvented using a Q-sort-like arrangement task, where the tokens are spatially arranged in a grid based on their similarity. This is an online implementation of the arrangement task as originally used in Nummenmaa, L., Hari, R., Hietanen, J.K., & Glerean, E. (2018). Maps of subjective feelings. Proceedings of the National Academy of Sciences of the United States of America. This toolbox was written by Enrico Glerean.
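The scaling argument and the arrangement-based alternative can be illustrated with a short sketch. The function names and the toy token positions below are hypothetical, and the use of plain Euclidean distance between grid positions is an assumption about how an arrangement might be converted into dissimilarities, not a description of the tool's own analysis.

```python
import math
from itertools import combinations


def n_pairwise_ratings(n_tokens):
    """Number of unique pairs that direct pairwise rating would require."""
    return n_tokens * (n_tokens - 1) // 2


def arrangement_distances(positions):
    """Euclidean distance between every pair of arranged tokens.

    positions: dict mapping token name to its (x, y) location on the grid.
    """
    return {
        (a, b): math.dist(positions[a], positions[b])
        for a, b in combinations(sorted(positions), 2)
    }


# Toy arrangement by one participant: closer tokens are judged more similar.
positions = {"joy": (0, 0), "pride": (3, 4), "fear": (6, 8)}
distances = arrangement_distances(positions)
```

For 10 tokens the pairwise approach needs 45 ratings and for 100 tokens it needs 4950, whereas a single arrangement yields all pairwise distances at once.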

GIT: https://version.aalto.fi/gitlab/eglerean/sensations

Dynamic annotation web-tool

When working with high-dimensional stimuli such as movies or speech, the stimulus model has to account for intensity and temporal fluctuations. This is a web implementation of the dynamic stimulus annotation toolbox originally used in Nummenmaa, L., Glerean, E., Viinikainen, M., Jääskeläinen, I.P., Hari, R., & Sams, M. (2012). Emotions promote social interaction by synchronizing brain activity across individuals. Proceedings of the National Academy of Sciences of the United States of America, 109, 9599–9604. This toolbox was written by Enrico Glerean.

GIT: https://version.aalto.fi/gitlab/eglerean/dynamicannotations

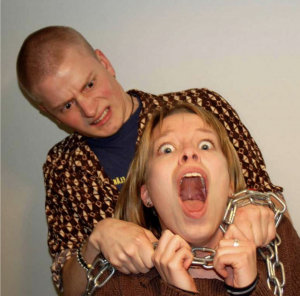

Aggressive behaviour stimuli

We have generated a standardised set of affective pictures displaying visually matched aggressive and neutral scenes for psychological and neuroimaging research. The set has been validated in fMRI, behavioural, and eye tracking studies. The stimuli are available for download from the links below.

If you use the stimuli in your work, please cite the following paper: Nummenmaa, L., Hirvonen, J., Parkkola, R., & Hietanen, J. K. (2008). Is emotional contagion special? An fMRI study on neural systems for affective and cognitive empathy. NeuroImage, 43, 571–580.

Download: Aggression pictures

Download: Neutral pictures

Categorical emotion vignettes

Our group has developed a set of short vignettes for evoking basic emotions. The set contains brief scenarios that help subjects frame their emotional imagery, but they can also be used as “bottom-up” stimuli. The vignettes have been successfully used in both fMRI and behavioural studies. Please note that we have only validated the Finnish translations, as our studies using these materials have been conducted in Finnish-speaking subjects. The stimuli are available for download from the link below. Please cite this paper if you use them in your work: Nummenmaa, L., Glerean, E., Hari, R., & Hietanen, J.K. (2014). Bodily maps of emotions. Proceedings of the National Academy of Sciences of the United States of America, 111, 646-651.

Download: Emotional vignettes

We have also developed a set of vignettes that cover both basic and complex emotions. For this set, please refer to this paper: Saarimäki, H., Ejtehadian, L.F., Glerean, E., Jääskeläinen, I.P., Vuilleumier, P., Sams, M., & Nummenmaa, L. (2018). Distributed affective space represents multiple emotion categories across the brain. Social Cognitive and Affective Neuroscience, 13, 471-482.

Download: Complex emotional vignettes

Dynamic emotional stories

Prolonged and variable affective stimuli are well suited for naturalistic stimulation models. We have generated a set of approximately 30-s-long affective stories that are suited for e.g. fMRI, psychophysiological and eye tracking experiments on semantic processing of emotions. The stories have been rated for affective valence and arousal and are currently available in Finnish. The stimuli are available for download from the links below. Please cite this paper if you use them in your work: Nummenmaa, L., Saarimäki, H., Glerean, E., Gotsopoulos, A., Hari, R., & Sams, M. (2014). Emotional speech synchronizes brains across listeners and engages large-scale dynamic brain networks. NeuroImage, 102, 498-509.

Download: Emotional stories as Excel file

Download: Emotional stories as audio – Part 1

Download: Emotional stories as audio – Part 2

Download: Emotional stories as audio – Part 3